Free Board

DeepSeek: One Question You Don't Need to Ask Anymore

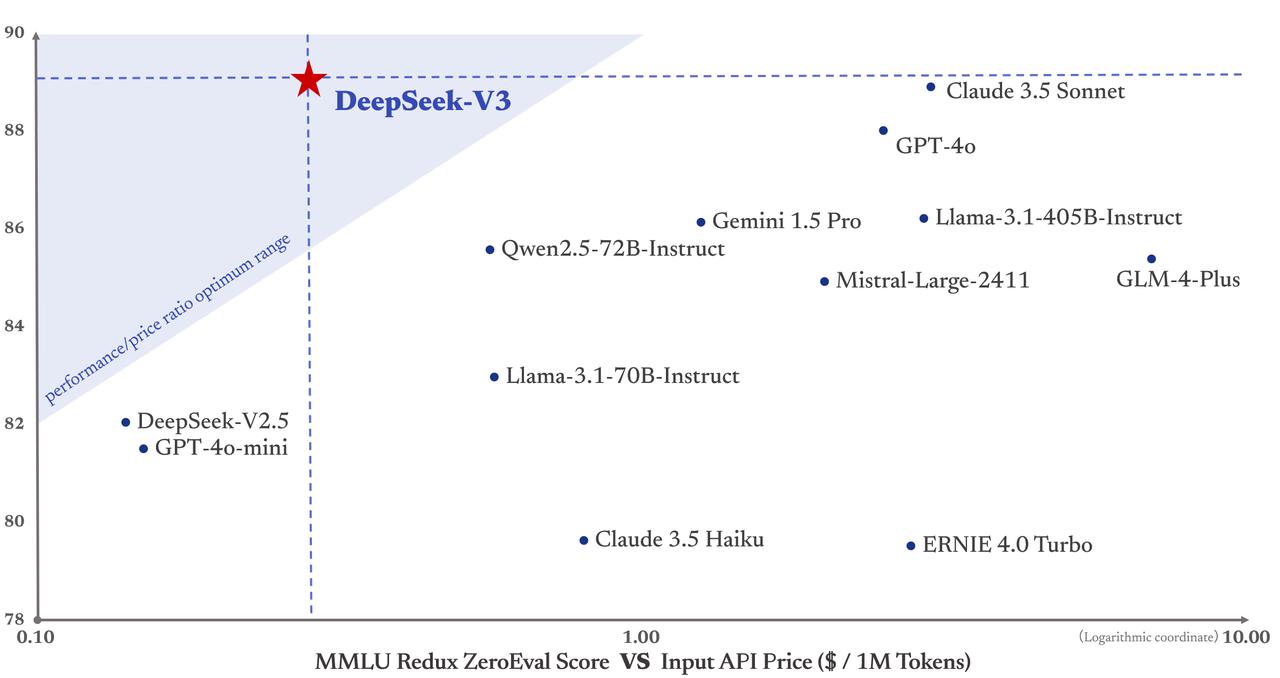

The DeepSeek App is engineered to be a robust tool in the arsenal of any tech enthusiast, developer, or researcher. DeepSeek has become an important tool for our product development process. 1. Cost-Efficiency: DeepSeek's development costs are considerably lower than competitors', potentially leading to more affordable AI solutions. 3. Open-Source Approach: Publicly available model weights, encouraging collaborative development. Open-Source Availability: DeepSeek offers greater flexibility for developers and researchers to customize and build upon the model. When comparing DeepSeek vs OpenAI, I found that DeepSeek offers comparable performance at a fraction of the cost. 4. Efficient Architecture: The Mixture-of-Experts design allows for focused use of computational resources, improving overall performance. "In 1922, Qian Xuantong, a leading reformer in early Republican China, despondently noted that he was not even forty years old, but his nerves were exhausted due to the use of Chinese characters." DeepSeek's announcements rocked the capital markets on Monday due to concerns that future AI products will require less-costly infrastructure than Wall Street has assumed. As export restrictions tend to encourage Chinese innovation out of necessity, should the U.S.

These features position DeepSeek v3 as a strong competitor in the AI market, offering efficiency, performance, and innovation. This rapid progress positions DeepSeek as a strong competitor in the AI chatbot market. The release prompted Nvidia's biggest single-day market drop in U.S. 10. Rapid Iteration: Quick progression from initial release to DeepSeek-V3. SGLang: Fully supports the DeepSeek-V3 model in both BF16 and FP8 inference modes, with Multi-Token Prediction coming soon. 6. Multi-Token Prediction (MTP): Predicts multiple tokens simultaneously, accelerating inference. The story was not only entertaining but also demonstrated DeepSeek's ability to weave together multiple elements (time travel, writing, historical context) into a coherent narrative. Stress Testing: I pushed DeepSeek to its limits by testing its context window capacity and ability to handle specialized tasks. Seamless Microsoft 365 Integration: Works within Word, Excel, PowerPoint, Outlook, and Teams to assist with writing, summarizing, and automating tasks. Mathematical Prowess: DeepSeek consistently outperformed in mathematical reasoning tasks. DeepSeek showed superior performance in mathematical reasoning and certain technical tasks. 2. Multi-head Latent Attention (MLA): Improves handling of complex queries and improves overall model performance.

DeepSeek Chat consistently outperformed GPT-4o in terms of response speed, particularly for longer queries. You are not allowed to display, use, or apply for registration of trademarks, domain names, etc., related to the aforementioned terms in any way, and you shall not perform acts that expressly or implicitly indicate the right to display, use, or otherwise deal with these identifiers to others. 36Kr: After selecting the right people, how do you get them up to speed? Let's get real: DeepSeek's release shook the AI world. You get insights into the number of active users, chats, and the overall quality of conversations. What does seem likely is that DeepSeek was able to distill those models to give V3 high-quality tokens to train on. The platform's artificial analysis quality speaks volumes. Real-time Web Access: Uses Google Search to provide up-to-date information and improve content accuracy. 4. Data Privacy Concerns: Questions remain about data handling practices and potential government access to user data.

These included creative writing tasks, technical problem-solving, data analysis, and open-ended questions. In technical problem-solving tasks, DeepSeek showed impressive capabilities, particularly in mathematical reasoning. Its performance in specialized tasks, particularly in mathematical reasoning and technical problem-solving, is truly impressive. This smart resource allocation delivers peak performance while keeping costs down. This efficiency translates to significant cost savings, with training costs under $6 million compared to an estimated $100 million for GPT-4. The API costs USD 0.55 per million input tokens and USD 2.19 per million output tokens - much lower than competitors. DeepSeek-R1 scores an impressive 79.8% accuracy on the AIME 2024 math competition and 97.3% on the MATH-500 test. DeepSeek R1 has demonstrated competitive performance on various AI benchmarks, including a 79.8% accuracy on AIME 2024 and 97.3% on MATH-500. This stark contrast underscores DeepSeek-V3's efficiency, achieving cutting-edge performance with significantly reduced computational resources and financial investment. From a business standpoint, basic research has a low return on investment. DeepSeek is a Chinese company, and some people worry that its AI models may have biases or reflect state-imposed censorship.
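The per-token API prices quoted above can be turned into a rough cost estimate for a given workload. The sketch below is only arithmetic on the two figures in the post (USD 0.55 per million input tokens, USD 2.19 per million output tokens). The function name `estimate_cost_usd` and the example token counts are made up for illustration, and the prices should be treated as a snapshot rather than current pricing.

```python
# Prices as quoted in the post (USD per one million tokens).
# Assumption: flat per-token pricing with no caching discounts or tiers.
INPUT_USD_PER_MILLION = 0.55
OUTPUT_USD_PER_MILLION = 2.19


def estimate_cost_usd(input_tokens: int, output_tokens: int) -> float:
    """Estimate the API cost in USD for a workload (hypothetical helper)."""
    if input_tokens < 0 or output_tokens < 0:
        raise ValueError("token counts must be non-negative")
    total = (
        input_tokens * INPUT_USD_PER_MILLION
        + output_tokens * OUTPUT_USD_PER_MILLION
    )
    return total / 1_000_000


if __name__ == "__main__":
    # Illustrative workload: 2M input tokens and 0.5M output tokens.
    cost = estimate_cost_usd(2_000_000, 500_000)
    print(f"Estimated cost: ${cost:.3f}")
```

For one million tokens in each direction, the estimate is simply the sum of the two quoted prices, 0.55 + 2.19 = USD 2.74.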
